Optimizing the Parameters of Pipelined Multi-party Computation for Privacy-Preserving Machine Learning Applications

Abstract:

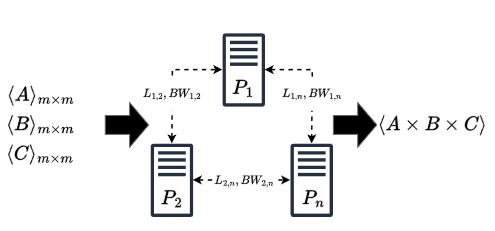

Cloud Service Providers (CSPs) have improved significantly in recent years, making it practical to outsource Machine Learning (ML) training and inference. However, because most ML applications have data privacy requirements, several privacy-preserving technologies, such as Multi-party Computation (MPC), have been proposed to protect the data. MPC splits data and exchanges it among multiple parties, which are typically hosted in cloud environments. Although MPC performs better than other alternatives, it still lags behind regular clear-text ML processing in terms of performance. To reduce the execution time of Privacy-Preserving ML (PPML) via MPC, computation and communication among the parties can be parallelized (i.e., pipelined MPC). However, the complexity of these systems makes it challenging to select an optimal network and node configuration for executing a pipelined MPC. To address this challenge, we propose a Multi-Objective Optimization (MOO) model that seeks the configuration minimizing both the MPC execution time and its cost. We formulate the optimization model and propose two distinct approaches to solve it. Our evaluation demonstrates a reduction in execution time and cost with respect to regular MPC execution. The results also provide insights into the impact of latency and bandwidth on the system's performance, contributing to PPML optimization.
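To give an intuition for why overlapping computation with communication can shorten execution, the following is a minimal sketch of a two-stage pipeline timing model. It is an illustration under stated assumptions, not the optimization model proposed in this paper: the function names, the per-batch compute times, and the simple `latency + size / bandwidth` communication cost are all hypothetical.

```python
from typing import List, Tuple


def comm_time(latency_s: float, size_bytes: int, bandwidth_bps: float) -> float:
    """Assumed cost of one exchange: fixed latency plus transfer time."""
    return latency_s + (size_bytes * 8) / bandwidth_bps


def sequential_time(batches: List[Tuple[float, float]]) -> float:
    """Regular execution: each batch computes, then communicates, in turn."""
    return sum(compute + comm for compute, comm in batches)


def pipelined_time(batches: List[Tuple[float, float]]) -> float:
    """Pipelined execution: computing batch i+1 overlaps with sending batch i.

    Modeled as a two-stage pipeline (compute stage, then communication stage),
    where each stage handles one batch at a time.
    """
    compute_done = 0.0  # time at which the compute stage finishes the current batch
    comm_done = 0.0     # time at which the communication stage finishes the current batch
    for compute, comm in batches:
        compute_done += compute
        # Communication starts once the batch is computed and the link is free.
        comm_done = max(comm_done, compute_done) + comm
    return comm_done


if __name__ == "__main__":
    # Hypothetical workload: 3 batches, 2.0 s of compute and 1.0 s of communication each.
    workload = [(2.0, 1.0)] * 3
    print("sequential:", sequential_time(workload))
    print("pipelined: ", pipelined_time(workload))
```

In this toy model, higher latency or lower bandwidth increases the per-batch communication time, and once communication exceeds computation it becomes the bottleneck stage of the pipeline.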